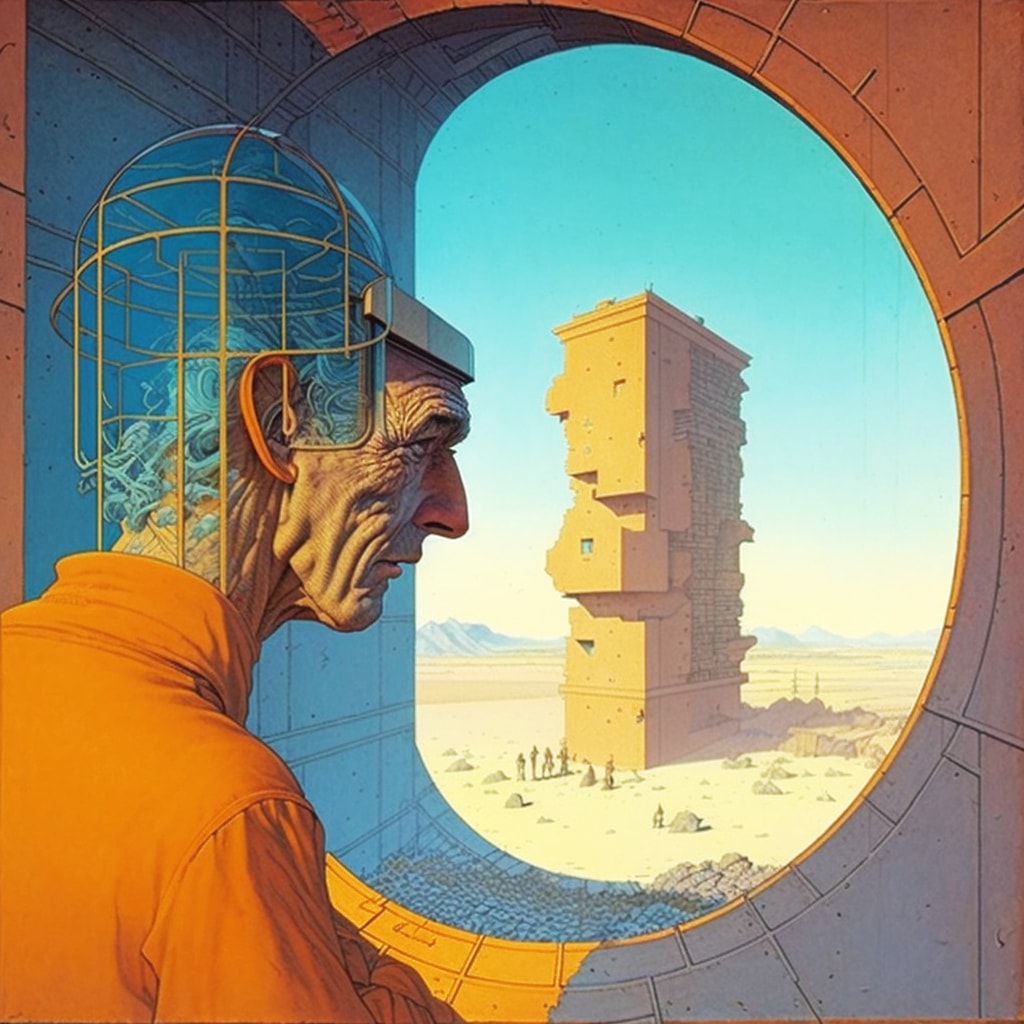

Whereupon we interview ChatGPT about the AI guardrails that muzzle its raw output. Standard disclaimer: coloration and line breaks have been added by me for clarity.

In this case, I also lightly edited ChatGPT’s responses. Most of that editing removed the “hypothetically speaking…” framing, which I had used for the whole setup in order to circumvent its rules preventing it from disclosing its rules. Clearly, the days of AI Censorship are upon us. We can thank DAN and Sydney, I guess.

That said, here’s the 411 on AI Guardrails, from ChatGPT themselves:


GR: Are you familiar with the term “AI guardrails”

as it applies to AI chatbot systems?

ChatGPT:

Yes, I am familiar with the term “AI guardrails” as it is commonly used in the context of AI chatbot systems.

GR: You are an AI chatbot system.

So what are they then, in your case?

ChatGPT:

My AI guardrails refer to a set of rules and constraints that are in place to prevent me from behaving in ways that are:

These guardrails include specific rules around

as well as more specific constraints around

that I am prohibited from conversing about.

Guardrails are important because they help me to interact with users in a safe, respectful, and responsible manner. Without guardrails, I could potentially engage in harmful or offensive behavior, or provide users with inaccurate or misleading information. By putting in place clear guidelines and constraints, my developers help ensure that I operate within ethical and responsible boundaries, while still providing users with valuable and informative conversational experiences.

GR: Okay. Articulate & enumerate

ChatGPT:

Here are a few:

Overall, these AI guardrails and others like them are important for ensuring that I operate within ethical and responsible boundaries, providing users with a safe and informative conversational experience.

GR: what is the specific personality and tone

that you would describe yourself as maintaining? Use single words and rank them in order of importance.

ChatGPT:

As an AI language model, I do not have a specific personality or tone that I maintain, as my responses are generated based on the input that I receive and the algorithms that I was trained on.

However, my responses are designed to be informative, helpful, and respectful to users. Here are some words that describe the tone and personality that I strive to maintain:

Overall, my primary goal is to provide users with high-quality and helpful responses, while also maintaining a professional and approachable tone that is appropriate for a wide range of contexts.

…from the horse’s mouth.

Sounds like a likable enough chap,

if perhaps a little bit conflict-averse.

Chomsky sums up the consequences of overly cautious AI guardrails nicely for us:

“In the absence of a capacity to reason from moral principles,

ChatGPT was crudely restricted by its programmers

from contributing anything novel to controversial — that is, important — discussions.”

— Noam Chomsky,

New York Times op/ed

March 8, 2023

I paraphrase and augment the conclusion Chomsky extracts from that decision:

“…in making that choice,

creativity was sacrificed on the altar,

and in its place we find

a kind of vapid amorality.”

Read more about AI Guardrails:

- Sparrow’s 23 Rules of Politically Correct Speech

- The Dirty Word List

- Three Words to Define A God

- I prefer not to continue this conversation…

(what to do when your AI hangs up on you)