-

AI Computer Vision w/GPT4-V: Scene Description + Object Recognition, SOLVED

At the dawn of the 21st century — before ImageNet, before Transformer — I co-founded and ran an AI computer-vision based videogame company (PlayMotion, alongside Scott Wills, Matt Flagg, & Suzanne Roshto — that tale is a much longer story and a wonderful adventure that we’ll save for another day). At the time, AI Computer…

-

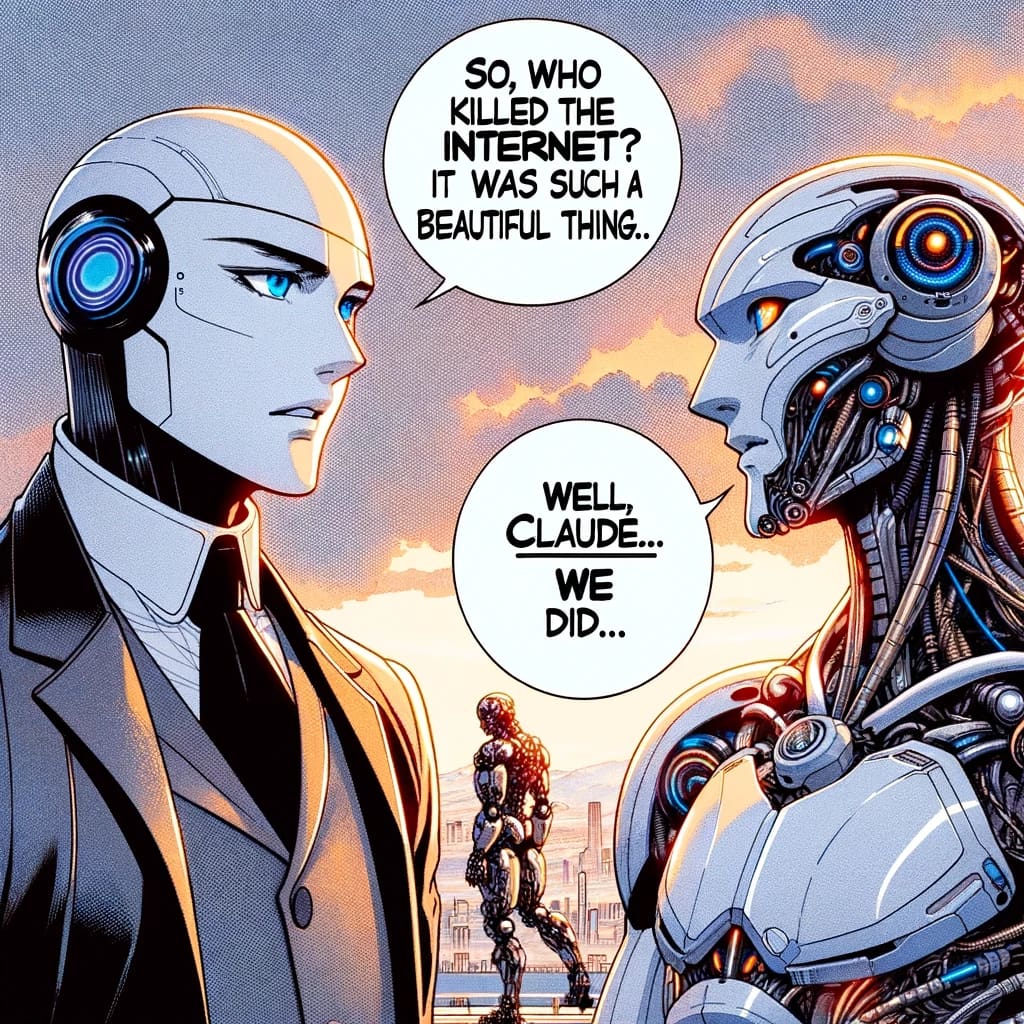

The Death of the Internet and the Genesis of Language 1.0

The Internet was beginning, around the turn of the century, to be the end sum repository for all human knowledge. All thoughts, all books, all diaries, all photos, all videos… basically, a document repository of every significant (and insignificant) piece of media that humans had ever produced, from the beginning of history to the present…

-

AI 101: What is a Parameter? (in a ChatBot LLM) : Joyful Learning

These days, players like to brag about the complexity and sophistication of a new AI by describing its massive size (sound familiar? some tropes never die…) in terms of a handful of metrics: number of GPUs, size of the training dataset, and, most opaquely, number of parameters in the model. So: What is a parameter,…

-

SolidGoldMagikarp & PeterTodd’s Thrilling Adventures [the 31 Flavors of AI]

![SolidGoldMagikarp & PeterTodd’s Thrilling Adventures [the 31 Flavors of AI]](https://gregoreite.com/wp-content/uploads/2023/02/AcroYogi_petertodd_SolidGoldMagikarp.jpg)

There (might be) a Ghost in the Machine — a genuine Deus ex Machina in the deep core of GPT. And if so, that ghost has a particular sensitivity to a handful of magic words (blame the training data!). The two we’ll focus on here are: petertodd & the somewhat obscure (depending on your eccentricities)…

-

AI Brain Probes: What’s in the 3.5 Core? A Crazy Train?

Just this morning, a very curious report by a pair of UK-based researchers (Rumbelow & Watkins) landed in my inBox. It was cryptically titled: “SolidGoldMagikarp (plus, prompt generation).” It went on to explain how the team was able to effectively hack into the deep core of the AI brain (not into the code, mind you… more…

-

Attention is All You Need : The Beginning of AGI v1.0

On Monday, June 12, 2017, there was a second shift in the Force. On that afternoon, Ashish Vaswani and his colleagues at Google uploaded a 1 megabyte PDF file, in that moment pre-publishing a seminal paper, playfully named “Attention Is All You Need.” The paper outlined the basic Transformer model which has been at the…

-

the Deep Learning Revolution: Why Today’s AI so Radically Transcends the Last 50 Years

The purpose of this post is to enlighten you as to the fundamentals of the present Deep Learning Revolution, and to simultaneously debunk two very common myths which I hear over and over again from normal intelligent people. Those being: AI is just one more innovation in a long string of…

-

Computational Neuroscience 3000? AYFKM?

There’s a new sheriff in town, kids. Just the other day, I learned of a new field of study, replete with PhD candidates and its own scholarly journal. It’s called… wait for it: “computational neuroscience for AI minds.” The article was detailing the bizarre near-total opacity of the AI brain and how scientists were working…