Upon further examination of the Meltdown / Coup at OpenAI, a story is starting to materialize. This isn’t about money. This is about religion. The epic battle of Safety vs Speed. This is the Jihad that’s been rocking AI frontlines for years now.

So: this was not a corporate takeover from Microsoft.

And no, this was not (on the surface) Big Money stomping on AI Utopianism. (Though, as of Sunday, it may actually turn out that way after all. Microsoft is apparently withholding Azure cloud compute from OpenAI unless they dissolve the board & reinstate Altman as CEO. Wow.)

Amazingly (and this makes me feel really GOOD inside actually) Kara Swisher is reporting that Altman was fired as an AI Safety issue: that the ex-CEO was directing the release of product too fast, product that was potentially harmful to humanity. I actually fully agree with that stance. And I can only see the “public / enterprise” front. God knows what is/was in the release pipeline (GPT5 = AGI? possible).

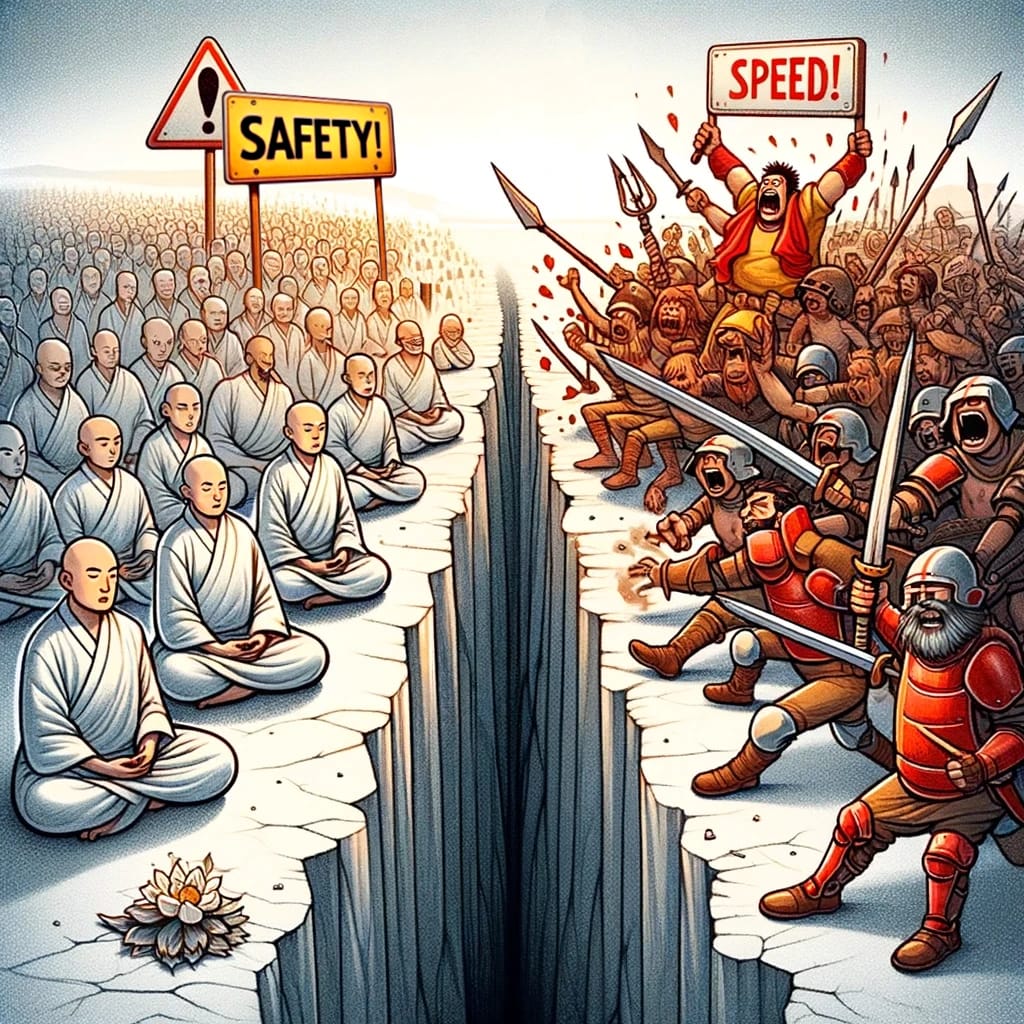

So, it appears that, at its heart (or at least this is the narrative that we’re being sold), Altman’s unceremonious decapitation was fallout from a religious war that has been boiling across the AI ecosystem for years: the ideological struggle of Safety vs Speed. “Decel” vs. e/acc (Effective Accelerationism; yes, it’s a thing). The Effective Altruists vs. the Techno-Optimists. Yudkowsky vs. Andreessen. And, as it turned out: Mira vs. Greg, and Ilya vs. Sam.

The scuttlebutt says that one of the “insider” board members (Ilya Sutskever, also one of my favorite geniuses, and fair to say the leading scientist behind today’s global AI surge) got into irreconcilable arguments with Sam over “Safety vs Speed.” Sam wanted to launch more and more product, faster, and Ilya wanted to put in more safety measures.

These safety measures were meant to prevent, among other things, AI-assisted synthesis of new pandemic-class pathogens. A related exercise was recently run at MIT: within an hour, starting from simple conversational prompts by students without scientific training, chatbots such as GPT-4 suggested potential pandemic pathogens and explained how they could be generated from synthetic DNA (source: Vox, June 2023).

I’m collecting a bunch of quotes that I think illustrate the depth and scope of this Safety vs Speed argument. And realize that this very debate is how Anthropic (which many see as the only viable competitor to OpenAI) was formed: through the defection of key staff who felt Sam was pushing too hard with disregard for safety. And yesterday, it was the entire reason that four people decided to effectively hit the self-destruct button on a company valued north of $80 billion.

Not a Big Tech Company, something better

“I continue to think that work towards general AI / AGI (with Safety top of mind) is both important and underappreciated… There is no outcome where [OpenAI] is one of the big five technology companies [alongside Apple, Alphabet, Amazon, Meta, and Microsoft]… This organization is something that’s fundamentally different, and my hope is that we can do a lot more good for the world than just become another corporation that gets big.”

— Adam D’Angelo, Forbes interview, Jan 2019

Ilya’s angle on the Takeover:

Q: “Was this a coup? Is this a hostile takeover?” (asked at the OpenAI all-hands meeting on Friday afternoon, in response to the news that the board had sacked Altman)

A: “You can call it this way. And I can understand why you chose this word, but I disagree with this. This was the board doing its duty to the mission of the nonprofit, which is to make sure that OpenAI builds AGI that benefits all of humanity.”

— Ilya Sutskever, speaking at the all-hands meeting, 11/17/2023

Mira’s angle:

[we must remain true to] “our mission and ability to develop safe and beneficial AGI together… we have three pillars guiding our decisions and actions:

- maximally advancing our research plan,

- our safety and alignment work, particularly our ability to scientifically predict capabilities and risks, and

- sharing our technology with the world in ways that are beneficial to all.”

— Mira Murati, memo to OpenAI employees, 11/17/2023

Altman implies that OpenAI may have achieved true AGI

On the eve of his firing, Altman was on stage at a major event, and his comments about a prototype he had seen in the past month imply that OpenAI / GPT5 may actually have achieved full-blown AGI (Artificial General Intelligence). This idea, in the characterization of @AISafetyMemes (also the legendary Shoggoth illustrator), supports the “Ilya pushed the AGI Panic Button” theory of Altman’s ousting:

1/n Breaking News! OpenAI has uncovered an emergent new cognitive capability, yet nobody is demanding answers! We are distracted by OpenAI governance politics and not the real issue!!! pic.twitter.com/T2ZGLQp5ts

— Carlos E. Perez (@IntuitMachine) November 19, 2023

APEC, San Francisco, 11/16/2023

The OpenAI Charter explicitly prioritizes Safety over Speed, Humanity over Profit

The following is from the OpenAI website. Read it yourself and decide where it falls along the Safety vs Speed spectrum (highlighting & emphasis are my own):

Our structure

We designed OpenAI’s structure—a partnership between our original Nonprofit and a new capped profit arm—as a chassis for OpenAI’s mission: to build artificial general intelligence (AGI) that is safe and benefits all of humanity.

…While investors typically seek financial returns, we saw a path to aligning their motives with our mission. We achieved this innovation with a few key economic and governance provisions:

- First, the for-profit subsidiary is fully controlled by the OpenAI Nonprofit. We enacted this by having the Nonprofit wholly own and control a manager entity (OpenAI GP LLC) that has the power to control and govern the for-profit subsidiary.

- Second, because the board is still the board of a Nonprofit, each director must perform their fiduciary duties in furtherance of its mission—safe AGI that is broadly beneficial. While the for-profit subsidiary is permitted to make and distribute profit, it is subject to this mission. The Nonprofit’s principal beneficiary is humanity, not OpenAI investors.

- Third, the board remains majority independent. Independent directors do not hold equity in OpenAI. Even OpenAI’s CEO, Sam Altman, does not hold equity directly. His only interest is indirectly through a Y Combinator investment fund that made a small investment in OpenAI before he was full-time.

- Fourth, profit allocated to investors and employees, including Microsoft, is capped. All residual value created above and beyond the cap will be returned to the Nonprofit for the benefit of humanity.

- Fifth, the board determines when we’ve attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.

It is a testament to Altman’s savvy and people skills that, under such a charter (which he co-authored), they could still secure well north of $10B in (capitalist) funding.

The page goes even further, with a foreboding graphical note telling investors to view their investment “in the spirit of a donation” in light of a “post-AGI” world, where, effectively, the Safety vs Speed argument becomes moot.

That strikes me as near socialist, certainly not capitalist.

How do you read it?

prompt: “please draw this image: On the left are a bunch of monks wearing white robes seated in meditation posture, with a large sign at top left reads “Safety!”. On the right are a bunch of rowdy warriors in red armor, brandishing swords and spears, waving a large sign at top right that reads “Speed!”. a deep chasm separates the two sides. square format”

engine: DALL-E 3, OpenAI