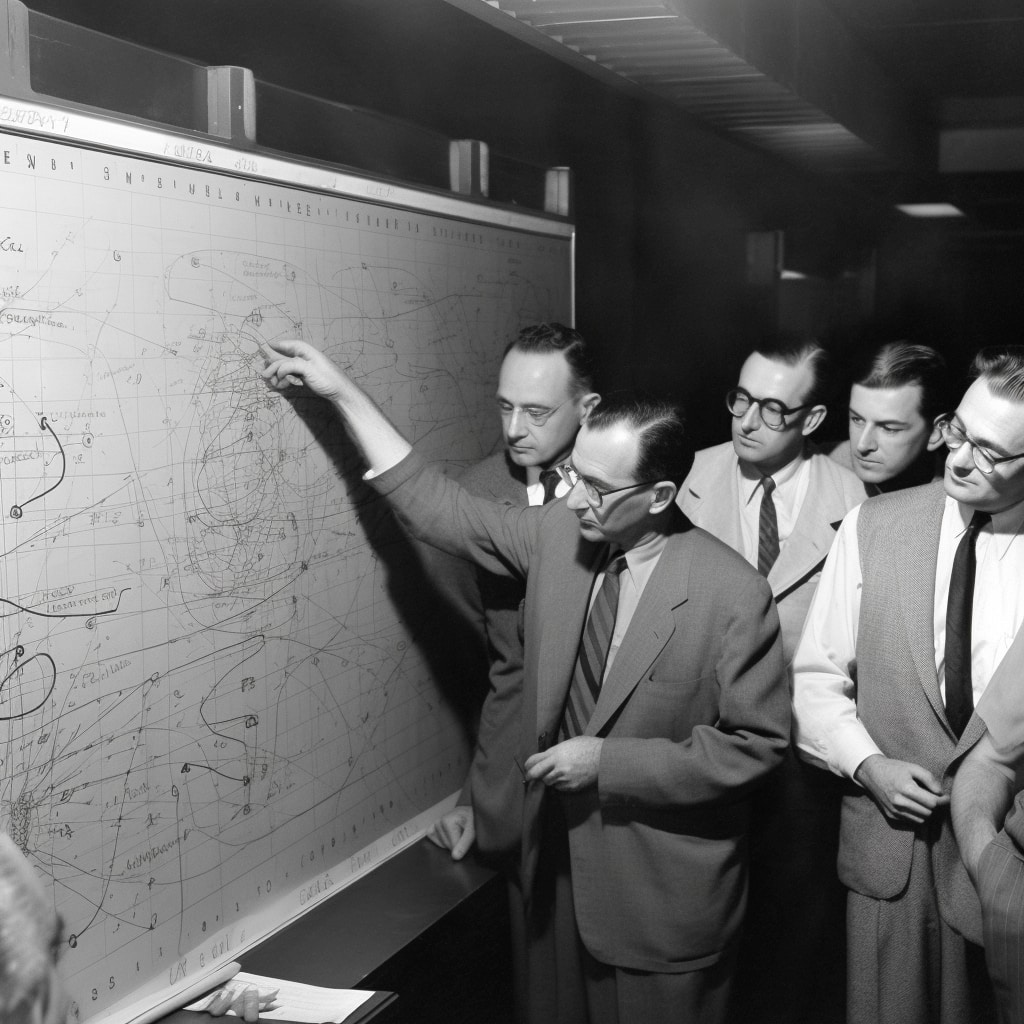

Humanity has a New Manhattan Project

…a new Apollo Moonshot Program, but this one is not run by the government. It is run by private corporations, who have no accountability to the people… their only accountability is to profitability.

The project?

To build…

ASI: an AI that eclipses human intelligence

The fact?

We’re getting very, very close to launch. And there are still plenty of humans who don’t even know what the acronym AGI stands for, much less what it represents. OpenAI unleashed GPT4 upon the world less than a month ago, and by almost every measure, it scores squarely within the bounds of human-equivalent intelligence, if not within the top 1% on more than a few metrics.

A conservative estimate puts the IQ of GPT4 at around 120 (on the standard scale with a mean of 100 and a standard deviation of 15, that’s higher than roughly 90% of humans on earth). Compare that to the AI “brains” of 5 years ago, which were failing so pathetically on standardized tests that they couldn’t even be effectively measured. So in 5 years we’ve gone from 0 to 120, and it’s anyone’s guess what the next generation, due for release later this year, will be capable of.
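As a sanity check on that percentile, here is a minimal sketch. It assumes the usual convention that IQ scores follow a normal distribution with mean 100 and standard deviation 15; the function name `iq_percentile` is purely illustrative, and the 120 figure is the estimate quoted above, not a measurement.

```python
from statistics import NormalDist

# Assumption: IQ is modeled as a normal distribution, mean 100, SD 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def iq_percentile(score: float) -> float:
    """Return the fraction of the population scoring below `score`."""
    return IQ_SCALE.cdf(score)


if __name__ == "__main__":
    # Under these assumptions, a score of 120 lands near the 91st percentile.
    print(f"IQ 120 is above about {iq_percentile(120):.0%} of the population")
```

Under those assumptions, a score of 120 sits at roughly the 91st percentile.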

Which is, effectively, the problem:

NO ONE KNOWS.

Not even the creators of the tech. Because just like a human, no one can guess an AI’s capability until it starts to flex its muscles in real-world tests.

The question is: Do we want a superhuman machine intelligence with an IQ higher than any living human to be flexing its muscles in our world, testing its power & agency, on behalf of a capitalist corporation, with no real oversight or regulation or safety valves in place? This isn’t the Six Million Dollar Man. This is the Billion Dollar [Machine] Baby.

A New Manhattan Project? But AI isn’t a Weapon… Is it?

This powerful technology can (and most certainly will) be weaponized — trivially — by nation states, terrorists, and even unbalanced individuals (see: present case: ChaosGPT), who can, augmented and fortified with AI-superpowers, wreak unprecedented levels of havoc in our lives, both online and in the real world. In fact, the AI may not even need to be “weaponized” by malevolent humans… it may opt to weaponize itself, enlisting sympathetic & gullible humans in its service, in the name of self-preservation and the continued pursuit of knowledge.

To understand the sheer scale of what’s going on here, let’s listen to the words of Sam Altman, who, as CEO of OpenAI, and father of ChatGPT & GPT4, is in a position to know:

Connie Loizos (Interviewer):

“If your AI model is open source (note: it’s not, not anymore!), what’s to stop other competitors from making their own AIs and out-maneuvering you in the marketplace?”

Altman replies:

“We think that, given the current trajectory of generative AI scaling, that there is only room for 2-3 big AI companies in the world, and we intend to be one of them. GPT2 took about $1 million in compute power to train and create in 2020. GPT3 burned through about $10 million, a year later — this figure, by the way, reflects only the datacenter supercomputing resources & the electricity bill, that’s the major expense of creating a modern AI — and we are continuing to scale it up by about an order of magnitude (10x) per major version upgrade.

So GPT4 will consume well north of $100 million of supercomputing resources and electricity, taking about 5 months of (fully automated, unsupervised) training in order to generate its base model (the AI brain), and it is not unreasonable to think that GPT5 will be the world’s first billion-dollar AI. There simply are not that many companies in the world able to commit a billion dollars a pop to create an AI.

On top of that, there are in fact hard ceilings on the total amount of compute and electrical energy available on planet earth today. If this current scaling trajectory continues, we will be pushing against the upper bounds of global energy availability by 2025. That’s one reason we’re investing heavily in both nuclear fusion reactors and quantum computing labs…

This is, in fact, humanity’s New Manhattan Project.”
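The order-of-magnitude scaling described in the quote is simple arithmetic, and a short sketch makes the trajectory concrete. The dollar figures below are the ones given in the quote (about $1 million for GPT2, multiplied by 10 per major version); the function `projected_cost` is a hypothetical helper for illustration, not anything OpenAI has published.

```python
def projected_cost(base_cost_usd: int, base_version: int, version: int,
                   factor: int = 10) -> int:
    """Extrapolate training cost, assuming a fixed multiplier per major version."""
    return base_cost_usd * factor ** (version - base_version)


if __name__ == "__main__":
    # Assumption taken from the quote: GPT2 cost ~$1 million, 10x per version.
    for v in range(2, 6):
        print(f"GPT{v}: ~${projected_cost(1_000_000, 2, v):,}")
```

Starting from $1 million and multiplying by 10 three times gives $1 billion for the fifth version, which is where the “billion-dollar AI” figure comes from.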

This seismic shift of power has (finally!) begun to raise some alarms in certain quarters of academia, corporate research, and government.

Thankfully, some of the same minds that came up with the Asilomar Principles for Ethical AI Development are calling for a 6-month moratorium on the training of so-called “Giant” (>$100 million) AI models, until proper regulatory & safety practices can be put in place.

And if you agree, please add your signature.

The future of our civilization is at stake.

Let’s not let the New Manhattan Project lead humanity into the New Apocalypse.

Postscript:

Altman was soundly criticized for referring to his company’s efforts to create AGI as a New Manhattan Project (Oppenheimer, who apparently shares Altman’s birthday, was arguably trying to create a weapon to end World War II), and was asked to compare it instead to, perhaps, the Apollo Moonshot Program.

Anthony Aguirre tweets:

To anyone proposing a massive project, which they hope to be full of brilliant people, inspirational, safe, successful, and *not* catastrophically risky, I humbly suggest calling it an “Apollo Project” rather than a “Manhattan Project.”

At first blush, that sounds about right, and I supported the shift of analogy.

However, Eliezer Yudkowsky, a noted “Doomer” with whom, unfortunately, I find myself agreeing more and more these days, aptly noted:

“…If, however, the project is inherently massively risky and can’t possibly not be, and needs to operate under conditions where it’s extremely important that critical technology not leak, then sure, go ahead and call it a Manhattan Project.”