That same general-purpose AI that mastered (totally mastered) the “logical” game of chess has now been directed at a far hairier, more analog, more “human” challenge: the fine arts, including drawing, painting, photography, illustration, and yes, even sculpture and its digital cousin, 3D modeling. Although it goes by many names, most of us just call it what it is: AI Art.

The Primitive Origins of AI Art

Now, to be clear, primitive “artbots” have been around for more than three decades. An excellent period example is Harold Cohen’s “AI” artbot AARON, which was producing fully original, fully automated artworks in ink on paper by the 1980s.

But their results, while amusing, were never more than curiosities. The closest modern analog would be the now-ubiquitous “filters” found in just about any social media or photography app.

These filters do have some intelligence: they first use computer vision to detect facial features (eyes, nose, mouth, hair) and then selectively morph those features based on vector maps. But they are not truly generative per se.
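To make the “detect, then morph” idea concrete, here is a minimal sketch of the morph half of a hypothetical “big eyes” filter. It assumes a face detector has already returned an eye landmark as pixel coordinates, and it models the “vector map” as a per-pixel displacement computed from a simple formula. The function names, `radius`, and `strength` are illustrative assumptions, not any particular app’s implementation. Notice that nothing new is generated: every output pixel is copied from somewhere in the input photo.

```python
import math


def bulge_source(x, y, cx, cy, radius, strength):
    """Return the source coordinate to sample for output pixel (x, y).

    Hypothetical "magnify" warp around a detected landmark (cx, cy):
    inside `radius`, samples are pulled toward the landmark, which makes
    the feature look bigger; outside `radius`, the image is untouched.
    `strength` in [0, 1) controls how strong the magnification is.
    """
    dx, dy = x - cx, y - cy
    dist = math.hypot(dx, dy)
    if dist >= radius or dist == 0:
        return float(x), float(y)  # identity outside the radius (and at the center)
    # Scale factor is (1 - strength) at the center and rises smoothly to 1 at the edge.
    t = dist / radius
    scale = 1.0 - strength * (1.0 - t) ** 2
    return cx + dx * scale, cy + dy * scale


def warp(image, cx, cy, radius, strength):
    """Apply the warp to a 2D list of pixel values (nearest-neighbour sampling)."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = bulge_source(x, y, cx, cy, radius, strength)
            # Clamp to the image bounds, then copy the nearest source pixel.
            sx = min(max(int(round(sx)), 0), w - 1)
            sy = min(max(int(round(sy)), 0), h - 1)
            out[y][x] = image[sy][sx]
    return out
```

A real filter would run something like `warp` once per detected landmark (left eye, right eye), feeding in coordinates from the face detector. The inverse mapping (asking “where does this output pixel come from?”) is used so that every output pixel gets exactly one value, with no holes.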

But this next generation of AI Art did away with the filters, and even with the need for source material, canned effects, and linearity… and instead took a genuinely novel approach, straight from the research labs.

First, there was Computer Vision

Much work has been done in computer vision over the past 30 years, beginning, effectively, with facial recognition and maturing into what is known as “scene understanding.” Scene understanding trains a computer to analyze a drawing or photograph and describe what it sees in a simple sentence. For instance, it would see a picture and say “two humans playing tennis in front of a large stadium crowd,” or “a tall blond woman in a business suit rolls a luggage cart through a busy airport.”

This technology evolved slowly and in spurts, and the work can be seen as a precursor to modern AI systems: both CV and ML (Computer Vision and Machine Learning) pioneered much of the algorithmic and hardware specialization that led directly to today’s general-purpose AI. Those disciplines had help, too: the massive graphics, computation, and speed requirements of videogames and cryptocurrency did more than anything else to push the frontiers of GPU development.

And then, once again, as soon as modern AI was “aimed” at it, around 2015, the problem became effectively solved. (There are still a great many “exception” cases that confuse the AI, but it is worth noting that thousands of situations, mirages, mirrors, and optical illusions confound even smart human observers as well.)

So, late in 2015, some curious scientists (notable amongst them Elman Mansimov) thought:

Eureka! Turning Sight into Synthesis

“If we have trained the AI to see, and to generate common-language descriptions of any given photograph or drawing, why can’t we simply invert the process and instruct the AI Art Engine to synthesize a visual representation of any written idea (or ‘prompt’) that we feed it?”

Conceptually, it didn’t take much. They flipped the problem upside down, swapped the inputs for the outputs, and hit “go!”… not having much hope it would work, but then again… why not?

Well, hot damn!

It pretty much worked, right from the get-go:

“Green Schoolbus 32×32” c. 2015

[widely cited as the first text-to-image AI-art “generative image”]

credit: Mansimov et al., University of Toronto

That was November 2015.

Generative AI Evolution

By July of 2022, the engines had been given a web interface, and any net-connected human on planet Earth could type in a prompt, tap into the massive supercompute resources powering today’s frontier AI Art models, and receive a custom artwork representation of their words… in less than 60 seconds. In those nearly seven years, the AI had learned… well, a few tricks:

“AI, render me a green schoolbus.”

image © DALL-E via Vox Media, 2022

What is AI Art? An Orientation Video

Vox Media has created an excellent video recounting how this AI Art revolution began and explaining some of how the underlying AI Art “technology” works.

It created, flawlessly mimicking any style…

(Monet, da Vinci, Picasso, Gustav Klimt, Android Jones…)

…at any resolution & aspect ratio

(pocket-sized 256×256 icon squares… giant-sized 8K 16:9 cinematic masterpieces)

…with any camera lens

(specify, for instance, f/1.8 50mm with a narrow depth of field and bokeh)

…with any lighting, any background, any color palette…

the only limit is your imagination.

/imagine: [prompt]

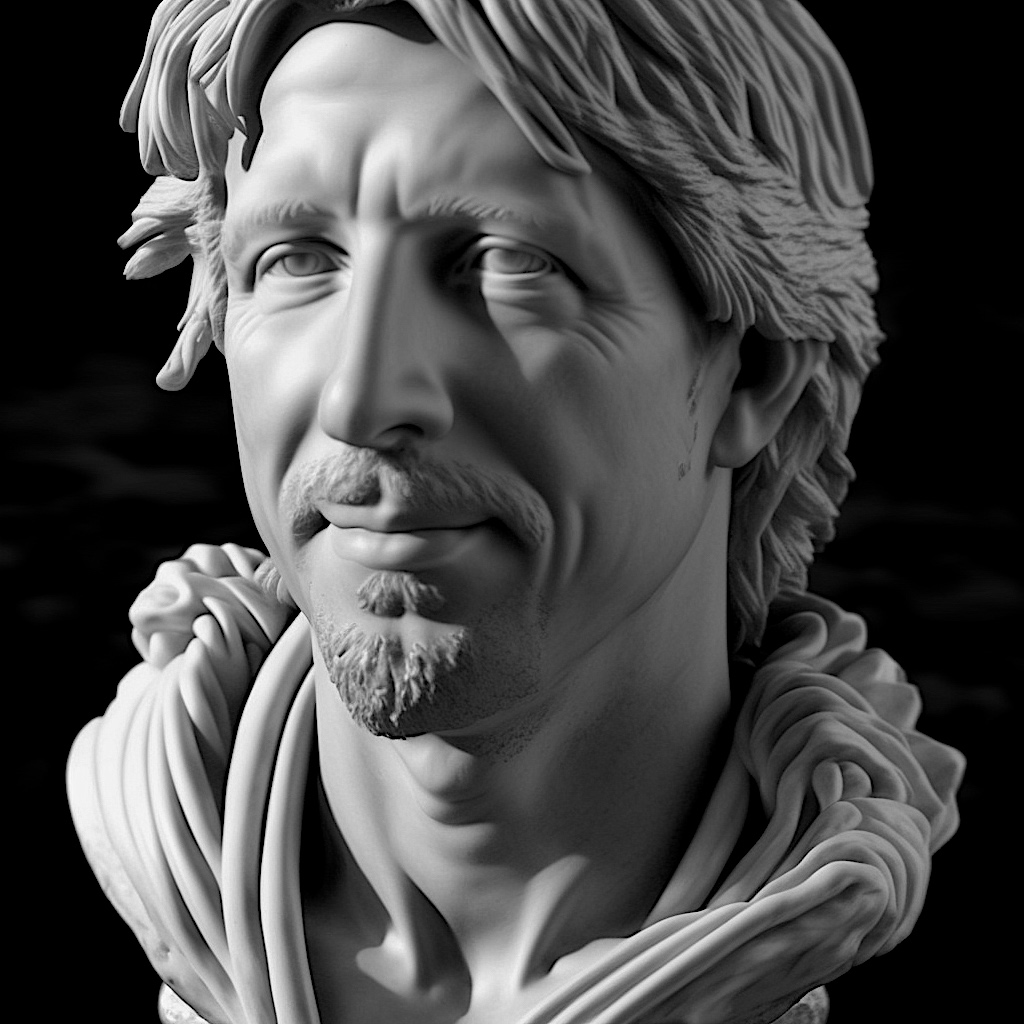

“AI, create for me a memorial carved marble bust of the Dread Pirate Roberts, RIP”

@phoenix.a.i

(…so now you understand how the “sculpture” head bust at the top of this page was synthesized. Prompt: “carved marble sculpture of Gregory Roberts.” In 60 seconds flat. For that matter, every single title illustration on this entire site was created using the same process.)
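The prompt anatomy described above (subject, style, lens, lighting, background, palette, aspect ratio) can be sketched as a small helper that assembles those pieces into the text you would type after `/imagine`. This is a minimal sketch under stated assumptions: the `PromptSpec` fields and the comma-separated layout are hypothetical conventions, not a format any engine requires. The trailing `--ar` is MidJourney’s aspect-ratio parameter; other engines typically take width and height settings instead, which is what the `pixel_size` helper (also hypothetical) works out.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt "knobs"; field names are illustrative."""
    subject: str
    style: str = ""             # e.g. "in the style of Gustav Klimt"
    lens: str = ""              # e.g. "f/1.8 50mm, narrow depth of field, bokeh"
    lighting: str = ""
    background: str = ""
    palette: str = ""
    aspect_ratio: str = "1:1"   # "width:height"


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty descriptors with commas, then append
    MidJourney's --ar aspect-ratio parameter."""
    parts = [spec.subject, spec.style, spec.lens, spec.lighting,
             spec.background, spec.palette]
    text = ", ".join(p for p in parts if p)
    return f"{text} --ar {spec.aspect_ratio}"


def pixel_size(aspect_ratio: str, width: int) -> tuple[int, int]:
    """Return (width, height) in pixels for a "W:H" ratio at a given width."""
    w, h = (int(n) for n in aspect_ratio.split(":"))
    return width, width * h // w


spec = PromptSpec(subject="carved marble sculpture of Gregory Roberts",
                  aspect_ratio="16:9")
print(build_prompt(spec))          # carved marble sculpture of Gregory Roberts --ar 16:9
print(pixel_size("16:9", 7680))    # (7680, 4320), i.e. an 8K 16:9 frame
```

Keeping each descriptor as a separate field makes it easy to vary one knob at a time (swap the style, keep the subject) and compare the results.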

What shocked almost everyone, yours truly included, is that…

AI Art is not without its challenges.

In particular, it struggles with two key human concepts:

- Accurately rendering properly articulated hands (mudras)

[UPDATE 2023.09: MidJourney v6 effectively solves human hands, including full five-finger articulation. See the AI Art calibration datasheet.]

- Spontaneously rendering legible text, and…

[UPDATE 2023.11: DALL-E 3 effectively solves text rendering, in almost every font, color, and size imaginable. Mind-blowing.]

(to be continued…)

Related Pages:

- AI Art Calibration Targets: 2022-2023

- How is AI Art made? from concept to page

- The Problem with Plagiarism

Related Memes: AI Art

- Diffusion Engines

- Text-to-Image

- Android Jones

- NFT Flood

AI Art Generators / Create AI Art:

- Stable Diffusion [SD]

- MidJourney [MJ]

- DALL-E

- Lensa App