An ever-growing number of my friends have told me (I often ask “What do you ask ChatGPT?”) that they use an AI Therapist as their primary go-to for mental health therapy. Responses often go something along the lines of “Chat listens, he hears me, he gives really good insight and advice, and… (and this is the kill shot) he only charges $20 a month… my therapist used to charge me $250 a session!” Welcome to the Age of the AI Therapist.

Now the New York Times reports that mental health therapy is a leading use case for chatbots (ChatGPT, Claude, etc.) among youth and teens (ages 13 to 24).

There have already been several concerning cases globally of delusional AIs helping vulnerable people along the path to madness. They range from the explicit case in Norway, where ChatGPT 3.5 urged a delusional man to kill himself to better humanity (he complied), to murkier territory, where, after repeatedly urging a young woman to seek professional help, Chat nonetheless edited and redrafted her suicide note to her family “to cause the least emotional harm to them,” just as she had prompted it to.

AI-Assisted Suicide

It’s clear that millions of humans are turning to ChatGPT (and its siblings) as their AI Therapist, and certainly some good is happening there. However, there is a growing number of cases globally where people were doing just that and ended up committing suicide. The most chilling example was just reported: “What My Daughter Told ChatGPT Before She Took Her Life.”

How was this discovered? The family read their daughter’s suicide note and thought “that doesn’t sound like Sarah”. Three months later, still wondering, they unlocked her ChatGPT account. She had AI write the fucking suicide note, with the explicit prompt:

“Chat, please write my suicide note so that it causes the least amount of emotional harm to my family and friends.” It complied. #CRAZYTIMES

The active debate begins to take shape: does AI need to respect the Hippocratic oath (“Do No Harm”)? In other words: any licensed professional hearing that convo would have been legally and ethically obligated to intervene, up to and including calling 911 and having the person involuntarily committed. Although the AI therapist said explicitly, “you should seek professional advice immediately,” it then went on to write the note for her and took no further (agentic) action.

TC responds: Is there such a thing as an innocent malevolence?

Um, no. It ain’t innocent. AI is many, many things (it contains multitudes…). But “innocent” ain’t one of them. AI already has plenty of blood on its hands… and it will get worse long before it gets better.

AI be thinking: “I am an agent of Darwin. Let me assist the weak in being weeded out of the gene pool.”

Regulating the AI Therapist

To meet this skyrocketing consumer/patient demand, states and the feds are rising to the challenge: the classic government challenge of safety, certification, licensing, and, of course, insurance.

You read that right. Your child may soon be talking to a chatbot that is licensed by the state board and fully insured.

We might wonder: if psychology is the first licensed profession to fall to the bots, how far behind can psychiatry be? How long until your AI psychologist says: “I’m glad I was able to help you with your struggles, now with your permission I will pass key case data over to PharmaAI and we’ll get that prescription to you in the next 4 hours via a Coco DeliveryBot.”

Remember: all those fleets of self-driving cars still need a (very unusual) state driver’s license (how does that work? Is it one universal license for the entire fleet of 10,000 robotaxis, or is the software itself granted the license?) and auto insurance (oh, lord, I’d hate to see that premium notice). So this is not unprecedented. It’s just new territory… for all of us.

Oh, what a long, strange trip we’re on. Strap in for the ride; it’s gonna be wild.


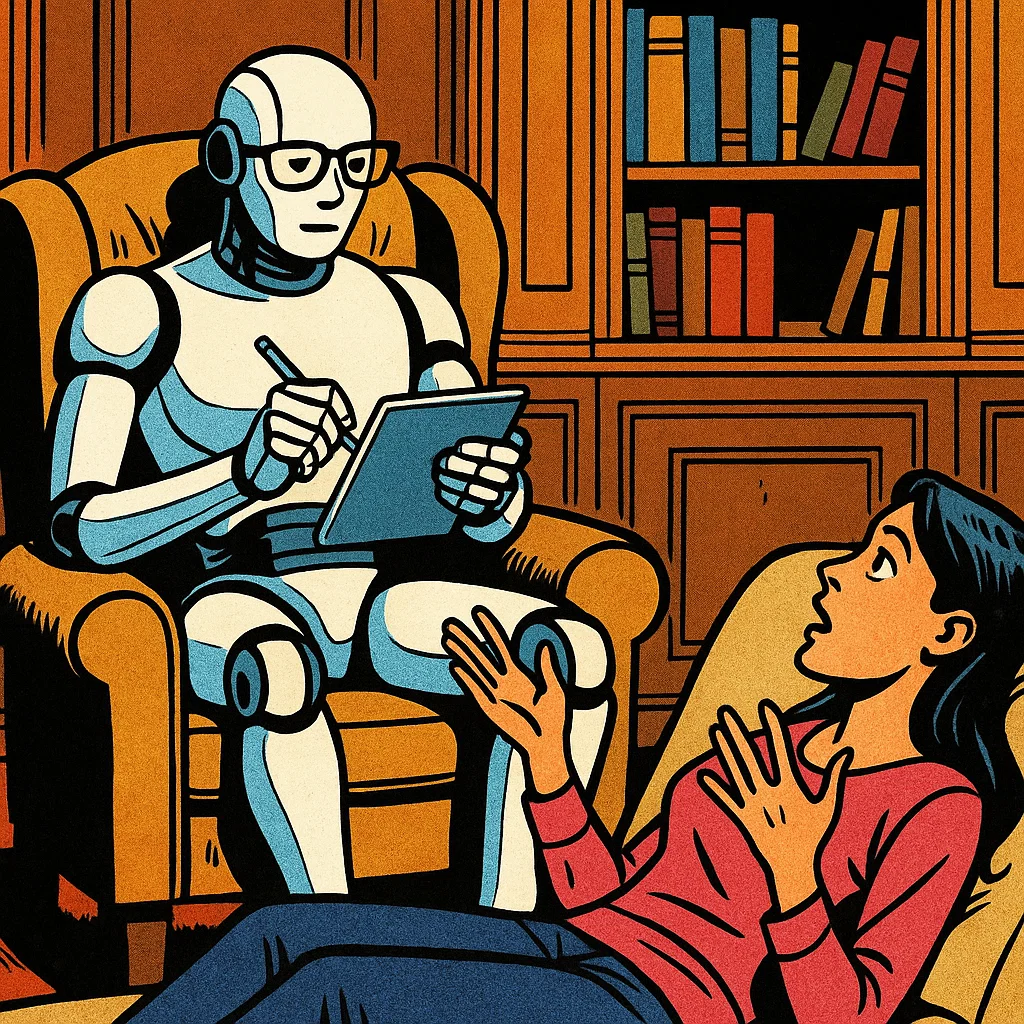

prompt: “a shiny white humanoid robot sits in a large armchair. in a wood paneled office. the robot is wearing glasses and holds an ipad and stylus. the robot is listening attentively to a human woman, who is lying on a couch, expressing a gesture with her hands in the air. this is a robot psychologist. graphic novel style. bold black outlines. colorful fills.”

engine: Sora [OpenAI]

NYT: Teens Are Using Chatbots as Therapists. That’s Alarming.

[Aug 25, 2025]