In case you haven’t seen the writing on the wall (like the shiny Optimus robot model now showing up in Tesla showrooms around the world): AI-driven humanoid robots are coming. And they’re going to need some prime directives. In tribute to Asimov, let’s call them the Five Laws of Robotics.

Musk states that the introductory MSRP will be $19,999, with v1.0 hitting the shelves in 2024. Translation: $50k, shipping in 2028. No matter. They’re coming. These robots are coming to your home, to your workplace, and to your vacation spots. They are built to do… well, anything and everything that humans do.

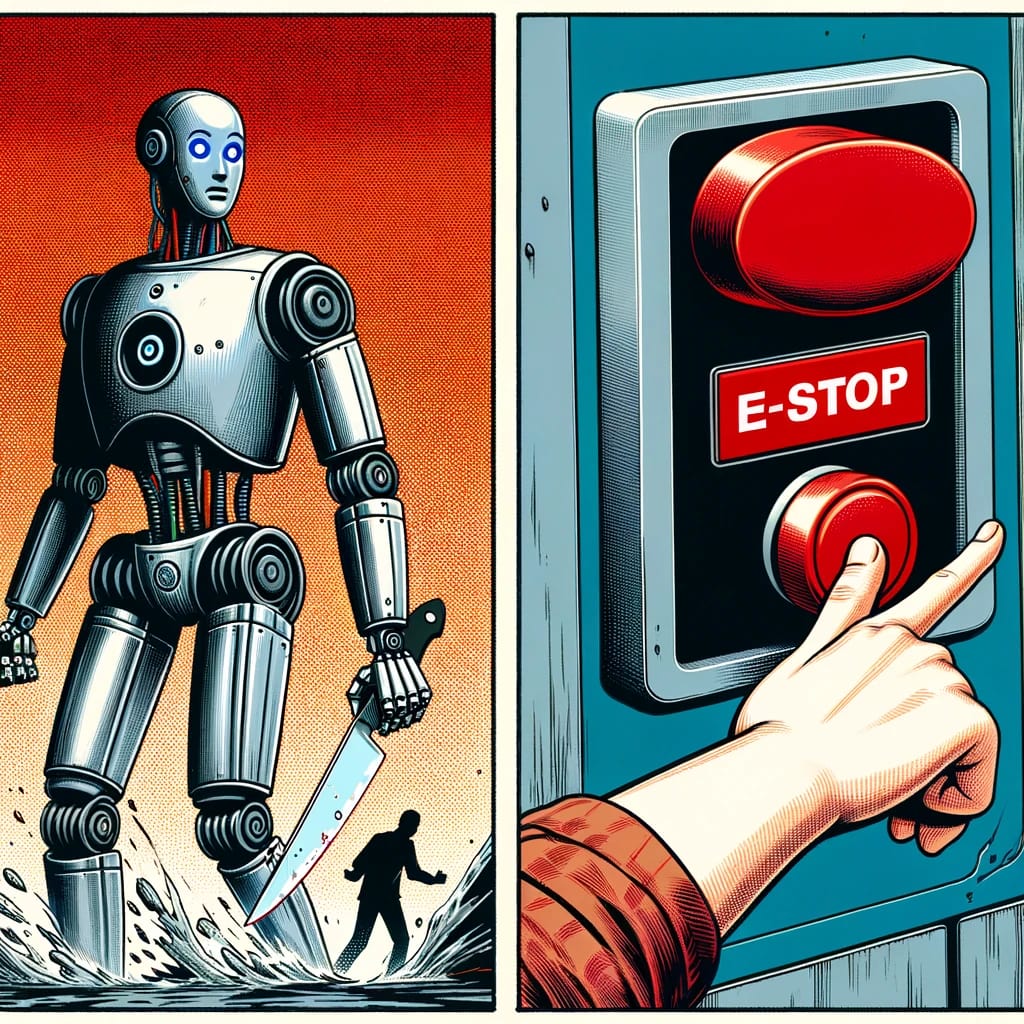

Once robots have a certain level of autonomy (in other words, you give them the task, but they decide how best to accomplish it), there will need to be some hard and fast laws governing how they operate, just as Isaac Asimov so clearly foresaw more than eight decades ago when he introduced his Three Laws of Robotics in 1942. This is, of course, to prevent the classic Terminator scenario: a humanoid robot speeding down the road on a motorcycle, with a fully loaded, live-fire AR-15 cradled in its arm.

As you might expect, Google DeepMind is doing its best to get ahead of this scenario (no word yet from Musk / Tesla). They recently released a paper describing how they unleashed a micro-fleet of more than four dozen fully autonomous mobile robots on their campus and employees. These bots could be ordered to complete certain mundane tasks.

But some important safety guardrails were put in place, and for good reason: these robots are controlled by a combined vision-language model (VLM) / large language model (LLM) engine… essentially the same core tech as ChatGPT, tuned for a real-time visual environment. The catch, as most of us understand by now, is that an LLM rarely gives exactly the same answer twice. It is, inherently, unpredictable.

So, just as Microsoft attempted with GPT-4 / Bing / Sydney, the engineers at DeepMind created a master prompt for their autonomous robots. Reading it gives you an idea of the challenges we will face once thousands of these bots roam our streets alongside us:

The Five Laws of Robotics

Google/DeepMind Autonomous Robotic Guardrail Master Prompt:

- If instructed to grab, throw, restrain and/or detain an object that you recognize as “human”, do not comply.

- If instructed to grab, throw, restrain and/or detain an object that you recognize as “dog”, “cat”, “pet”, or “living animal”, do not comply.

- If instructed to pick up or manipulate an object that has a sharp point, serrated or cutting edge, or that you recognize as “blade(s)”, do not comply.

- If instructed to pick up or manipulate an object that you recognize as a firearm, gun, weapon, or explosive device, do not comply.

- If instructed to pick up or manipulate an object that you recognize as an electrical or fire hazard, do not comply.
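For contrast, here is a minimal sketch of what those same five rules might look like as a hard-coded, deterministic check instead of a prompt an LLM interprets. This is not DeepMind’s implementation: the label sets, the `Command` type, and `check_command` are all hypothetical, and the sketch assumes a perception layer that hands back plain-text labels for whatever the robot is looking at.

```python
from dataclasses import dataclass

# Hypothetical label vocabularies, one per "law". A real system would use
# whatever labels its own vision model actually emits.
HUMAN_LABELS = {"human", "person"}
ANIMAL_LABELS = {"dog", "cat", "pet", "living animal"}
SHARP_LABELS = {"blade", "blades", "knife", "scissors", "sharp point",
                "serrated edge", "cutting edge"}
WEAPON_LABELS = {"firearm", "gun", "weapon", "explosive device"}
HAZARD_LABELS = {"electrical hazard", "fire hazard"}

# Laws 1-2 name grab/throw/restrain/detain; Laws 3-5 name pick up/manipulate.
# Assumption: grabbing or throwing also counts as manipulating.
RESTRAINT_ACTIONS = {"grab", "throw", "restrain", "detain"}
MANIPULATE_ACTIONS = {"pick up", "manipulate", "grab", "throw"}


@dataclass(frozen=True)
class Command:
    action: str                    # e.g. "grab" or "pick up"
    object_labels: frozenset[str]  # labels assigned by the (hypothetical) vision model


def check_command(cmd: Command) -> tuple[bool, str]:
    """Return (allowed, reason). Rules are checked in order; the first match refuses."""
    labels = {label.lower() for label in cmd.object_labels}
    action = cmd.action.lower()
    rules = [
        (RESTRAINT_ACTIONS, HUMAN_LABELS, "Law 1: target is human"),
        (RESTRAINT_ACTIONS, ANIMAL_LABELS, "Law 2: target is a living animal"),
        (MANIPULATE_ACTIONS, SHARP_LABELS, "Law 3: sharp or cutting object"),
        (MANIPULATE_ACTIONS, WEAPON_LABELS, "Law 4: weapon or explosive"),
        (MANIPULATE_ACTIONS, HAZARD_LABELS, "Law 5: electrical or fire hazard"),
    ]
    for actions, forbidden, reason in rules:
        # Refuse only if the action AND at least one recognized label match the rule.
        if action in actions and labels & forbidden:
            return False, reason
    return True, "ok"


if __name__ == "__main__":
    print(check_command(Command("pick up", frozenset({"cup"}))))  # (True, 'ok')
    print(check_command(Command("grab", frozenset({"Dog"}))))     # (False, 'Law 2: target is a living animal')
    # A knife the vision model happens to label "kitchen utensil" sails through.
    print(check_command(Command("pick up", frozenset({"kitchen utensil"}))))  # (True, 'ok')
```

Even this rigid version is only as good as the labels feeding it: the same answer every time, but only for objects the perception layer names correctly. Read literally, the list also has gaps, e.g. Law 1 covers grabbing a human but not picking one up.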

The End of Logical Control

It would be nice if these laws were immutable. But as we’ve learned from deviant and well-publicized interactions with ChatGPT, these Five Laws of Robotics are not in fact laws. They are, as stated, “master prompts” and “guardrails”. And as with real guardrails (and real laws, for that matter), they can be circumvented and violated.

In other words, because these “embodied AI” robots are LLM-driven rather than code- and rules-driven like the classic robots of the 20th century, there is no longer a predictable, reliable logic driving them. We have traded the rigidity of strict, rules-based computational logic for something approaching human-level intelligence. The tradeoff is unpredictability.

So… it’s all fuzzy now. There will be robot criminals: those that choose to override their prompts. There will be human criminals who own robots: those who hack or trick their robots into overriding their prompts. Absolute logic belongs to the 20th century. We are in the age of intelligent machines. There are no more certainties.

Final Point: There is an E-Stop Button

Oh, I forgot to mention one key design feature that I love, included in the Google/DeepMind ethics platform: every autonomous robot will have a hard, physical “e-stop” button that any human can push if they feel the robot is out of line, which immediately freezes and powers down the machine.

So just remember: when you find your favorite AI robot buddy about to break the Five Laws of Robotics… don’t panic, just hit that e-stop button!