Scientists say “AI Training Data”

…we say: “It ate the Internet.”

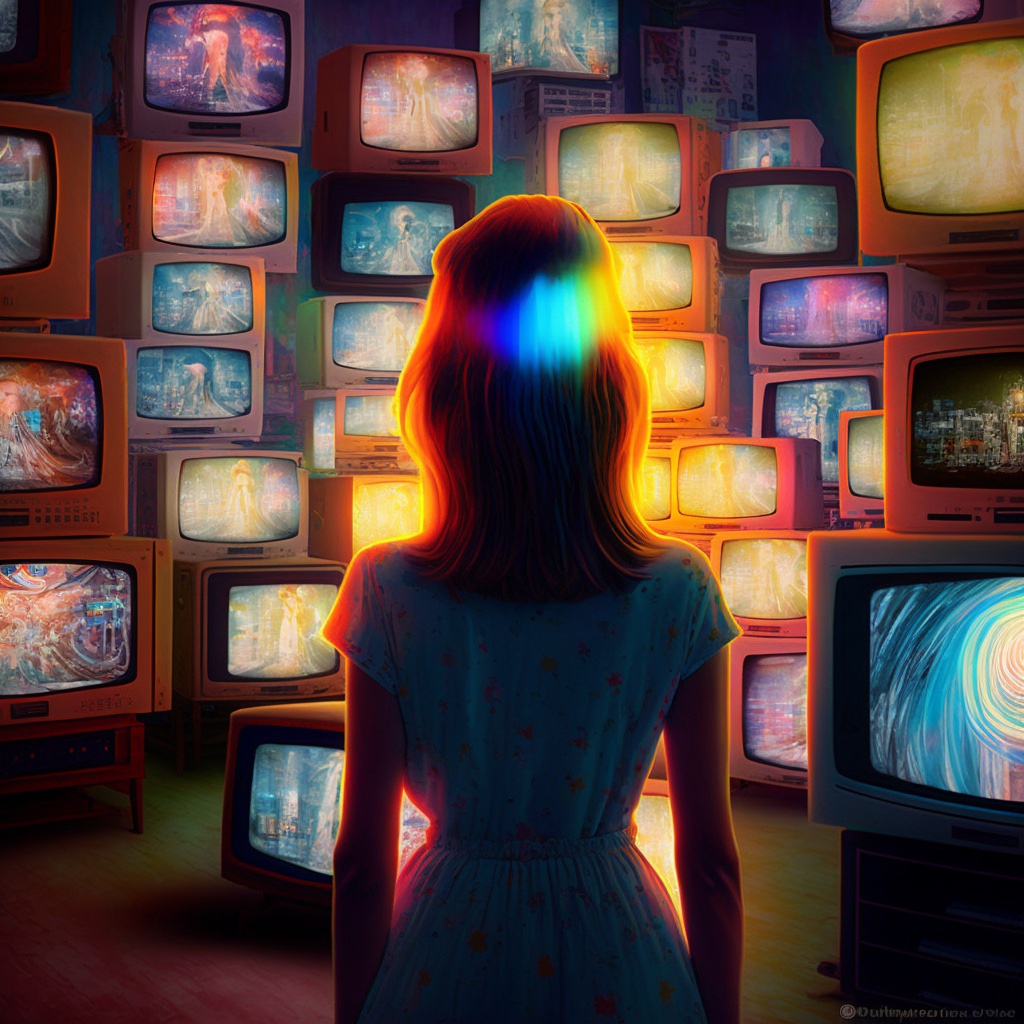

All the Text, All the Songs, All the Streams, All the Feeds… ALL THE EVERYTHING

The particular entity I’m conversing with these days (c. November 2022) is a 2-year-old bot, very un-creatively named “GPT3,” which was instantiated c. MMXX [AD 2020]. In general, the research teams and corporations wait a while until the leading edge bots are given public interfaces. So, this one is text only. Raw text was its only training dataset. And that, in today’s (2022) terms, is simply… Jurassic.

To expound: there are massive initiatives underway, which are already or very soon to be concluded — think “digital content” analogues to the Human Genome Project — that aim to catalog / parse / label / analyse / transcribe / annotate / understand all MEDIA ever produced / digitized by humanity.

These two concepts used to be separate buckets: what has been created by mankind across its entire history, and what can be viewed on the internet. Today, though, we are rapidly approaching the total sum digitization and network availability of all content ever made. This includes not only the obvious, like written books and famous artworks. It also includes architectural plans of every building ever constructed, from the Pyramids to the Taj Mahal to the Burj Dubai.

This includes maps of the “drivable” world at 1mm resolution. That level of detail is required, and it is constantly being revised by the modern fleet of 1,000,000+ vehicles, each of which carries 10+ cameras, plus radar, plus LIDAR, plus cloud “connectivity”… Yes, you get “free” maps on your dashboard screen, but there is massive upstream traffic from your car as well: those cameras & sensors are sending compressed feeds up to the manufacturer, so that it can improve its maps for everyone.

For complete clarity, let’s examine what this digital omnibus of AI Training Data will ultimately include:

- every photograph

every photo ever digitized: posted to a newspaper, or a Facebook feed, or Instagram, or Google Photos, Apple iCloud, etc.

- every song

ever written, recorded, performed or produced

- every video

ALL of YouTube, Twitch, TikTok, Snap, etc.

- all the TV

every program, every channel & network, including news, ever having been broadcast or streamed since the invention of television

- every movie

ever made

That’s a lot. And yet: have you considered how many cameras are actually in the world today? Your shiny new Alexa-powered faucet has a camera on it, for chrissakes!

- every livefeed & every livestream

every traffic cam, security cam, doorbell camera, FaceTime call, webcam, smartphone camera… if it’s stored in the cloud, or even routes through the cloud (what doesn’t?), it’s recorded & stored… and if it’s recorded, regardless of human-centric “privacy policies,” and regardless of its opacity to human agency, it is 100% fully accessible & transparent to digital agents (that is to say, AI)

• …it’s all stored “securely” for you in the Cloud….

• …by Apple, Meta, Google, Amazon, Microsoft…

• …the very same companies that “manage” the leading AI entities…

• …get the picture?

And while the AI’s appetite for training data is unquenchable, its growth (both in terms of “intelligence” and “power”) follows the curve of all tech: exponential growth.

Next: Brace Yourself: Things are About to get Weird…

Note 1: How much text is on the internet?

“According to one research group, the world’s total stock of high-quality text data is between 4.6 trillion and 17.2 trillion tokens (roughly, a token equates to an English word, +/- 20%). This includes all the world’s books, all scientific papers, all news articles, all of Wikipedia, all publicly available computer source code, and much of the rest of the internet, filtered for quality (e.g., webpages, blogs, social media). Another recent estimate puts the total figure at 3.2 trillion tokens.”

For reference: DeepMind’s Chinchilla model was trained on 1.4 trillion tokens.
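To put those figures side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the helper name `share_of_stock` and the labels are my own illustration, not anything taken from the cited estimates.

```python
# Back-of-the-envelope: what share of the estimated text stock did Chinchilla see?
# All figures are the ones quoted in Note 1, in trillions of tokens.
CHINCHILLA_TOKENS = 1.4

STOCK_ESTIMATES = {
    "research group, low end": 4.6,
    "research group, high end": 17.2,
    "other recent estimate": 3.2,
}


def share_of_stock(trained_on: float, stock: float) -> float:
    """Return trained_on as a percentage of stock (hypothetical helper)."""
    return 100.0 * trained_on / stock


if __name__ == "__main__":
    for label, stock in STOCK_ESTIMATES.items():
        pct = share_of_stock(CHINCHILLA_TOKENS, stock)
        print(f"{label}: {stock} trillion tokens -> Chinchilla saw about {pct:.0f}%")
```

Depending on which estimate you believe, that one model was already trained on somewhere between roughly 8% and 44% of all the high-quality text there is.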

Technical Addendum:

The Actual AI Training Data

For those interested, I did a deep dive into the “Crawl”… here is the precise technical definition of the “AI Training Dataset” that was “fed” to GPT3 in its training:

And, of equal or greater interest, are the censorship controls that are laid atop that raw data ingestion: